Duplicate Content: Causes And Solutions

Duplicate content generally refers to substantive blocks of content, within or across domains, that either completely match other content or are noticeably similar, according to the Jacksonville SEO experts. Most of the time, it is not deceptive in origin.

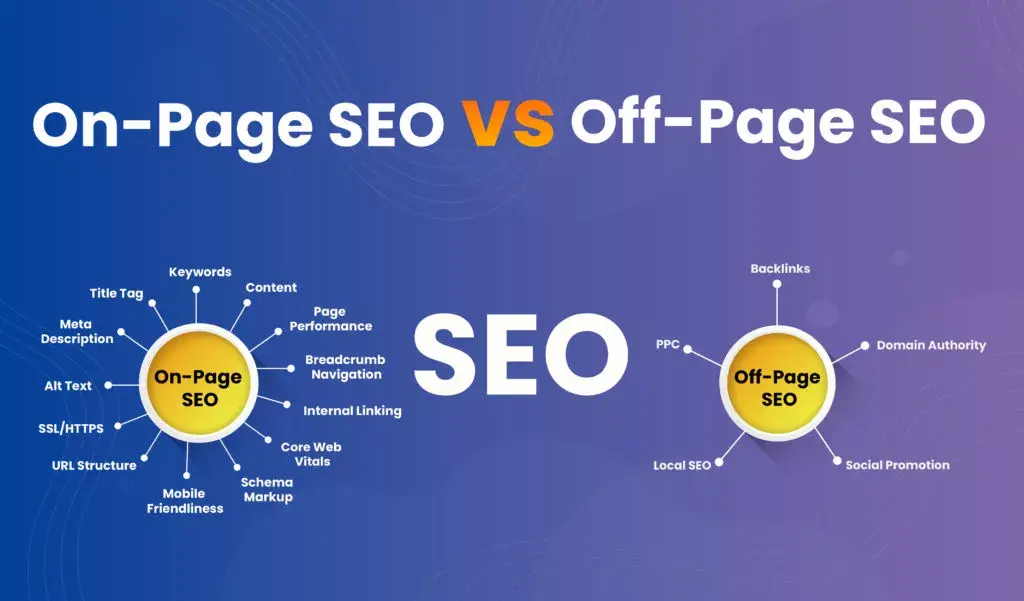

On-page body text is the most obvious place to look for duplicate content. However, duplicate titles and meta descriptions also count, and they can be difficult for search marketers to find and update without a duplicate content checker.

According to former Google engineer Matt Cutts, between 25 and 30 percent of the web’s content is duplicate. It’s easy to see how this happens: generic product descriptions, boilerplate content, and marketing slogans are frequently repeated across domains and pages without any malicious intent.

Search engines recognize duplicate content for what it is, which is why, despite claims to the contrary, it does not result in a Google penalty. If your site has been penalized for another reason, consult a guide on how to identify and recover from Google penalties.

Copied content, on the other hand, will get you a Google penalty. Copied content is what results when spammers scrape content from an original source and republish it on their own site. Like duplicate content, it leaves two web pages containing similar sections of content. Unlike duplicate content, it is done on purpose, offers no value to the reader, and frequently involves a low-quality website.

Search engines take scraped content seriously and may penalize the scraping site. It is therefore a good idea to make it clear to crawlers that your site’s content is not scraped from other sources.

Duplicate content instances on the same domain

This form of duplicate content can turn up on your e-commerce site, in your blog articles, or elsewhere on your website. Think of it as identical content that appears on multiple pages of your site.

The content may be available at several locations (URLs) on your site, or it may be reachable in several ways that produce different URL parameters. For example, the same posts can appear again when a visitor runs a site search.

Let’s have a look at some examples of duplicate content on the same website.

- Boilerplate content:

Simply put, boilerplate content appears in many sections or pages of your website. Ann Smarty defines boilerplate content as:

- Global navigation (across the entire site) (home, about us, etc.)

- Specific places, especially if links are included (blogroll, navigation bar)

- Markup (JavaScript, names of CSS ids/classes like header, footer)

A standard website typically has a header, a footer, and a sidebar. Beyond these, most CMSs let you display your most recent or most popular posts on your homepage. When search bots crawl your site and find the same content repeated across it, they identify it as duplicate content. This type of duplicate content does not harm your SEO, however, because search engine bots understand that the intent behind it is not malicious. So you’re safe, according to the experts from Jacksonville SEO Company.

- URL structures that aren’t consistent:

Have a look at the URLs below –

- www.yoursite.com/

- yoursite.com

- http://yoursite.com

- http://yoursite.com/

- https://www.yoursite.com

- https://yoursite.com

Do they look the same to you?

You are right that they all lead to the same destination, so to you they mean the same thing. Unfortunately, search engine algorithms interpret them as distinct URLs. Google’s July 2021 core update, for reference, was a broad update that adjusted the algorithm as a whole rather than any particular function.

When search engine bots see the same content at two separate URLs, such as http://yoursite.com and https://yoursite.com, they treat it as duplicate content. The same issue arises with URL parameters generated for tracking purposes, because each tracked variant is a different URL serving the same page.
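The URL variants above can be collapsed to one preferred form before you compare or publish links. The following is a minimal sketch, not a definitive implementation: the function name `normalize_url`, the choice of `https` plus `www.` as the preferred form, and the list of tracking parameters are all assumptions made for illustration.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical set of tracking parameters to drop; adjust it to your own analytics setup.
TRACKING_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
    "gclid", "fbclid",
}

def normalize_url(url, preferred_scheme="https", preferred_host_prefix="www."):
    """Map common variants of a URL to one preferred form (assumed: https + www)."""
    # Bare hosts such as "yoursite.com" have no scheme, so add one before parsing.
    if "://" not in url:
        url = "http://" + url
    parts = urlsplit(url)

    # Treat "www.yoursite.com" and "yoursite.com" as the same host.
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[len("www."):]
    host = preferred_host_prefix + host

    # Treat "/page/" and "/page" as the same path; an empty path becomes "/".
    path = parts.path.rstrip("/") or "/"

    # Keep only the query parameters that are not used for tracking.
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    return urlunsplit((preferred_scheme, host, path, urlencode(kept), ""))

variants = [
    "www.yoursite.com/",
    "yoursite.com",
    "http://yoursite.com",
    "http://yoursite.com/",
    "https://www.yoursite.com",
    "https://yoursite.com",
]
print({normalize_url(u) for u in variants})
```

Under these assumptions, all six variants listed earlier map to a single URL, which is the form you would then use consistently in internal links and redirects.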

- Localized domains

Assume you serve customers in several regions and have set up a localized domain for each one. For example, you might have a .de version of your website for Germany and a .au version for Australia. It’s only natural that the content on these two sites will overlap. Unless you translate your content for the .de domain, search engines will consider it duplicated across both sites. In such cases, Google will display one of the two URLs when a searcher looks for your business. Google usually takes the searcher’s location into account. If the searcher is in Germany, for instance, Google will by default display only your .de domain. Google, however, might not always get it right.

Duplicate content instances across many domains

- Content that has been copied:

According to Google, copying content from a website without permission is unethical. If your site offers only duplicate content, it is at risk, especially now that the Panda update is in effect. Google may leave it out of the search results entirely, or push it far down the results pages.

- Content curation:

Content curation is the act of finding articles that are relevant to your readers and writing blog posts about them. These articles can come from anywhere on the Internet, including site pages and social media.

Because a content curation post gathers a list of content pieces from across the web, it naturally contains some duplicate content, even if only duplicate titles. Most blog posts also use snippets and quotes. Again, Google does not consider this to be spam. According to the SEO experts, Google will not treat the duplication as malicious if you contribute some insight, offer a fresh perspective, or explain things in your own style, so you won’t have to worry about rel canonical tags, session IDs, and the rest.

- Content syndication:

Content syndication is becoming a more common method of content management. Curata found that syndicated content makes up 10% of the ideal content marketing mix.

According to Search Engine Land, content syndication is the act of pushing your blog, site, or video content out to third-party sites as a complete article, snippet, link, or thumbnail. Sites that syndicate content allow it to be shared across multiple platforms, which means that any syndicated post exists in many copies.

If you’re familiar with the Huffington Post, you’ll know that you can syndicate your articles there. Every day, it collects and republishes stories from across the web with permission.

The safest way to syndicate content is to ask republishing sites to credit you as the original content provider and to link back to your site using appropriate anchor text.

- Scraping content

When it comes to duplicate content issues, content scraping is always a grey area. Wikipedia defines web scraping (also known as web harvesting or web data extraction) as a technique for obtaining data from websites using computer software. Surprisingly, Google itself scrapes data in order to present it on the first SERP.

What counts as duplicate content and what doesn’t?

Translated content is NOT duplicate content. If you have localized your site for multiple countries and translated your core content into the local languages, you won’t have any content duplication issues.

If you don’t have a responsive website, you may have created a separate mobile version of your main website.

As a result, you’ll have multiple URLs serving the same content, such as:

Web version: http://yoursite.com

Mobile version: http://m.yoursite.com/

Having the same content on your web and mobile versions is not considered duplicate content. Google also has separate search bots for crawling mobile sites, so you shouldn’t be concerned.

Problems caused by duplicate content

1. Dilution of link popularity

If you start link building without settling on a consistent URL structure for your site, you’ll end up creating and distributing multiple versions of your site links.

Consider the following scenario: you’ve built a massive resource that has attracted a ton of inbound links and a large number of visits. However, the page authority of the original page has not risen as much as you expected. Despite all of the links and traction, why hasn’t the page authority increased?

Perhaps it didn’t because different backlinking sites used different variations of the resource URL to link back to it.

Like:

http://www.yoursite.com/resource

http://yoursite.com/resource

http://yoursite.com/resource and so on…

This is how neglecting duplicate content management can sabotage your efforts to build a page’s authority.

All of this happened because search engines couldn’t figure out that all of the URLs led to the same destination.
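The dilution can be made visible by counting inbound links per URL variant and then per underlying page. The sketch below assumes you already have a list of backlink targets exported from some link tool; the sample data, the helper `link_target_key`, and the rule for deciding that two URLs are the same page (ignore the scheme, a leading `www.`, and a trailing slash) are all hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit

def link_target_key(url):
    """Reduce a URL to host + path, ignoring scheme, "www." and a trailing slash."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[len("www."):]
    path = parts.path.rstrip("/") or "/"
    return host + path

# Hypothetical backlink data: one entry per inbound link, as the linking site wrote it.
sample_backlinks = (
    ["http://www.yoursite.com/resource"] * 3
    + ["http://yoursite.com/resource"] * 2
    + ["https://yoursite.com/resource/"] * 1
)

# Links as a search engine sees them when every variant is a separate URL.
links_per_variant = Counter(sample_backlinks)

# Links after the variants are consolidated into one page.
links_per_page = Counter(link_target_key(url) for url in sample_backlinks)

print(links_per_variant.most_common())
print(links_per_page.most_common())
```

With this sample data, the strongest single variant holds three links while the consolidated page holds six, which is the gap that a consistent URL structure is meant to close.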

2. URLs that aren’t user-friendly

When Google comes across two resources on the web that are identical or nearly so, it shows the searcher only one of them. In most cases, Google will choose the most appropriate version of your content. However, it does not always get it right. It’s possible that Google will display a less-than-appealing URL version of your site for a specific search query.

For instance, if a searcher were looking for your company online, which of the following URLs would you rather they see:

http://yoursite.com/overview.html or

http://yoursite.com/yoursite.com/yoursite.com/yoursite.com/yoursite.com/yoursite.com

You would presumably prefer the first. However, Google may just display the second. If you had avoided duplicating content in the first place, there would have been no confusion, and the user would have seen only the best and most branded version of your URL.

3. Using up the resources of search engine crawlers

If you know how crawlers work, you know that Google sends its search bots to crawl your site at a frequency based on how often you publish new content.

Consider this scenario: Google crawlers come to your site and crawl five URLs, all of which offer identical content.

Crawler cycles are wasted when search bots discover and index the same content in different parts of your site. With those cycles spent on duplicates, the search bots may not get around to crawling your new content.

Those crawler cycles could have been used to crawl and index newly published content on your site. Duplicate content therefore not only wastes crawler resources but also hurts your SEO.
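To find out whether several of your URLs serve identical content, as in the five-URL scenario above, one option is to fingerprint each page body and group the URLs that share a fingerprint. This is a minimal sketch under stated assumptions: the page bodies are already fetched and passed in as plain text, and the function names `fingerprint` and `find_duplicate_groups` are illustrative. It only catches exact matches after whitespace and case are normalized, not near-duplicates.

```python
import hashlib
from collections import defaultdict

def fingerprint(text):
    """Hash a page body after collapsing whitespace and case."""
    # Normalizing first keeps trivial formatting differences from hiding duplicates.
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def find_duplicate_groups(pages):
    """Return lists of URLs whose bodies share a fingerprint.

    `pages` maps each URL to its already-extracted body text.
    """
    groups = defaultdict(list)
    for url, body in pages.items():
        groups[fingerprint(body)].append(url)
    # Only groups with more than one URL indicate duplicated content.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]

# Hypothetical crawl result for illustration.
sample_pages = {
    "http://yoursite.com/resource": "Our complete guide  to widgets.",
    "http://yoursite.com/resource?ref=footer": "our complete guide to widgets.",
    "http://yoursite.com/about": "About our company.",
}
print(find_duplicate_groups(sample_pages))
```

Each group returned is a set of URLs competing for the same crawl cycles, and therefore a candidate for a redirect or a rel canonical tag.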

How can you identify your duplicate content?

Method 1: Perform a basic Google search.

The simplest way to uncover duplicate content issues on your website is a basic Google search. Search for a keyword that you rank for and look at the results. If Google returns a non-user-friendly URL for your content, you’ve got duplicate content on your hands.

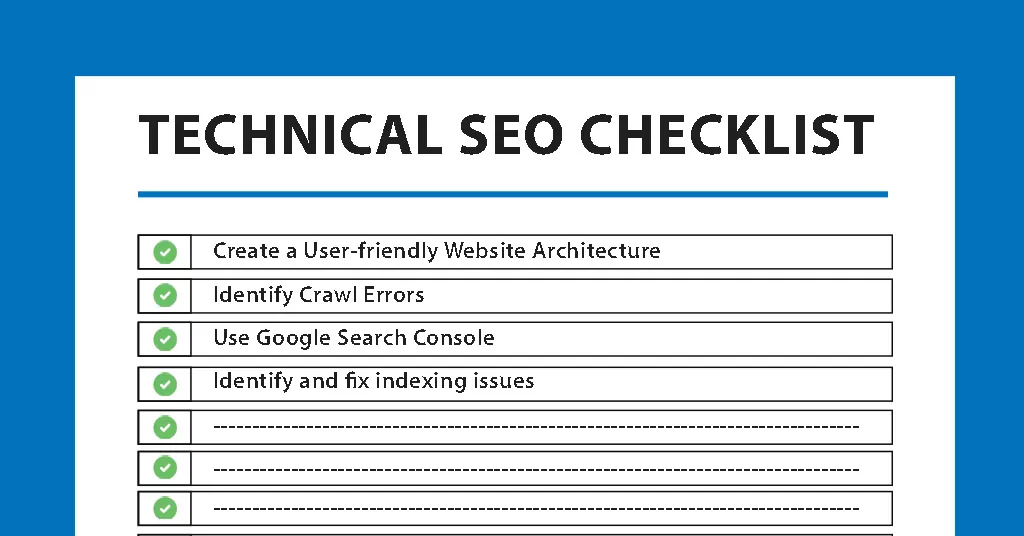

Method 2: Check Google Webmasters for notifications

Google Search Console will also notify you if your site contains duplicate content. Log in to your Google Webmasters account to see if you have any duplicate content alerts.

Method 3: Go to your Webmasters dashboard and look at the Crawler analytics

The crawler metrics show the number of pages that Google crawlers have crawled on your site. If crawlers are crawling and indexing hundreds of pages while your site has only a few, you may be using inconsistent URLs or anchor text, or you may not be using rel canonical tags. In that case, search engine crawlers are crawling the same content at different URLs several times.

To see the crawler metrics, log in to your Google Webmasters account and select the Crawl option on the left side. Then select the Crawl Stats option from the expanded menu.

Method 4: Look for content chunks

This is a basic method, but it is worth trying if you believe that your content is being copied on other sites or blog posts, or that it is available in multiple locations on your own site. Run a quick Google search using a random block of text from your content. Avoid long paragraphs, because they will cause an error. Choose a two- to three-sentence passage and search for it on Google. If other sites presenting your content show up in the search results, you’re most likely a victim of plagiarism.
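Picking the text block and turning it into a search can be sketched as follows. The function names, the naive sentence splitting, and the `max_words` cap are assumptions made for illustration; the cap simply reflects the advice above to keep the searched passage short. Wrapping the passage in double quotes asks Google for an exact-phrase match.

```python
import re
from urllib.parse import quote_plus

def build_phrase_query(text, start_sentence=0, num_sentences=2, max_words=30):
    """Pick a few consecutive sentences and wrap them in quotes for an exact-phrase search."""
    # Naive sentence split on ., ! or ? followed by whitespace; good enough for a sketch.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]
    snippet = " ".join(sentences[start_sentence:start_sentence + num_sentences])
    # Cap the length (hypothetical limit) so the query stays short.
    words = snippet.split()[:max_words]
    # Remove any double quotes inside the passage so they do not break the phrase.
    phrase = " ".join(words).replace('"', "")
    return '"' + phrase + '"'

def search_url(query):
    """Build a Google search URL for the query."""
    return "https://www.google.com/search?q=" + quote_plus(query)

sample_text = "First sentence here. Second one follows! Third is ignored."
query = build_phrase_query(sample_text)
print(query)
print(search_url(query))
```

Opening the resulting URL in a browser and checking which domains appear is then the manual step described above.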

Duplicate content can and should be eliminated. It is a common occurrence: few sites with more than 1,000 pages are free of some sort of duplicate content issue. It’s something you’ll have to keep an eye on all the time, but it’s fixable, and the benefits can be substantial. Simply by removing duplicate content from your site, you could see your best content climb in the rankings.

In this digital age, it is critical for any business, regardless of size or scale, to have a strong online presence. Even a startup or small firm won’t find it difficult to reach its short-term goals quickly when it uses top-notch SEO services.