Everything you need to know about how search engine indexing works?

Table of contents

There is little doubt that SEO is taking longer to deliver results than ever before (assuming you fit Google’s guidelines as we do at Totally).

At the same time, however, the worth of being top of organic search has never been greater, 57% of B2B marketers say that SEO generates more leads than the other marketing options. This is why this industry has rapidly grown in the recent years the SEO/Search industry has grown at such a rapid rate in recent years.

As more and more websites are developed and compete for attention, search engines have had to stay up with this growth while still specializing in delivering the foremost relevant content for a user say the Jacksonville SEO experts. As a result, it’s important to own a firm grounding in how search works so as to confirm your site is as program friendly as possible.

At a basic level, there are three key processes in delivering search results I’m visiting today; crawling, indexing and ranking.

In this article we will learn about:

- What Is Computer Program Indexing?

- What is PageRank?

- How PageRank flows through pages?

- How Does a Pursuit Engine Index a Site?

- How To Get A Page Indexed Faster?

- 4 Tools for computer program Indexing

What Is Computer Program Indexing?

Search engine indexing refers to the method where an exploration engine (such as Google) organizes and stores online content in an exceedingly central database (its index). The program can then analyze and understand the content, and serve it to readers in ranked lists on its computer program Results Pages (SERPs).

Before indexing an internet site, a pursuit engine uses “crawlers” to research links and content say the experts from Jacksonville SEO Company. Then, the computer program takes the crawled content and organizes it in its database.

We’ll look closer at how this process works within the next section. For now, it can help to consider indexing as an internet file system for website posts and pages, videos, images, and other content. When it involves Google, this method is an unlimited database referred to as the Google index.

What is PageRank?

“PageRank” may be a Google algorithm named after the co-founder of Google, Larry Page (yes, really!) It’s a price for every page calculated by counting the number of links pointing at a page so as to see the page’s value relative to each other page on the net. The worth gone by each individual link is predicated on the amount and value of links that point to the page with the link.

PageRank is simply one of all the various signals used within the big Google ranking algorithm.

An approximation of the PageRank values was initially provided by Google but they’re now not publicly visible.

While PageRank could be a Google term, all commercial search engines calculate and use identical link equity metrics. Some SEO tools use their own logic and calculations try to give an estimation of PageRank. For instance, Page Authority in Moz tools, TrustFlow in Majestic, or URL Rating in Ahrefs. DeepCrawl includes a metric called DeepRank to live the worth of pages supporting the interior links within an internet site.

How PageRank flows through pages?

Pages pass the PageRank, or link equity, through to other pages via links. When a page links to content elsewhere it’s seen as a vote of confidence and trust, in this, the content being linked to is being recommended as relevant and useful for users. The count of those links and therefore the measure of how authoritative the linking website determines the relative PageRank of the linked-to page.

PageRank is equally divided across all the discovered links on the page. For instance, if your page has five links, each link would pass 20% of the page’s PageRank through each link to the target pages. Links that use the rel=”nofollow” attribute don’t pass PageRank.

How Does a Pursuit Engine Index a Site?

Search engines like Google use “crawlers” to explore the online content and categorize it. These crawlers are software bots that follow links, scan web pages, and gain the maximum amount of data from a couple of websites as possible. Then, they deliver the data to the search engine’s servers to be indexed.

Every time content is published or updated, search engines crawl and index it to feature its information in their databases. This process can happen automatically, but you’ll be able to speed it up by submitting sitemaps to go looking for engines. These documents outline your website’s infrastructure, including links, to assist search engines to crawl and understand your content more effectively.

Search engine crawlers care for a “crawl budget.” This budget limits what percentage of pages the bots will crawl and index on your website within a group period. (They do come, however.)

Crawlers compile information on essential data like keywords, publish dates, images, and video files. Search engines also analyze the link between different pages and websites by following and indexing internal links and external URLs.

Note that program crawlers won’t follow all of the URLs on an internet site. they’re going to automatically crawl dofollow links, ignoring their nofollow equivalents. Therefore, you’ll want to specialize in dofollow links in your link-building efforts. These are URLs from external sites that time your content.

If external links will pass their “link juice” when crawlers follow them from another site to yours when they are of high-quality say the Jacksonville SEO experts. As such, these URLs can boost your rankings within the SERPs.

Furthermore, remember that some content isn’t crawlable. If your pages are hidden behind login forms, and passwords, otherwise you have text embedded in your images, search engines won’t be ready to access and index that content. (You can use alt text to possess these images that appear in searches on their own, however.)

How To Get A Page Indexed Faster?

But, what if you would like Googlebot to push to your page faster?

This can be important if you have got timely content or if you’ve made a vital change to a page you would like Google to grasp about.

I use faster methods when I’ve optimized a critical page or I’ve adjusted the title and/or description to enhance click-throughs. I need to grasp specifically after they were picked up and displayed within the SERPs to understand where the measurement of improvement starts.

In these instances, there are some additional methods you’ll be able to use.

1. XML Sitemaps

XML sitemaps are the oldest and generally reliable thanks to a research engine’s attention to content.

An XML sitemap gives search engines an inventory of all the pages on your site, further as additional details about it, like when it absolutely was last modified.

A sitemap will be submitted to Bing via Bing Webmaster Tools and it may also be submitted to Google via Search Console.

Definitely recommended!

But after you need a page indexed immediately, it’s not particularly reliable.

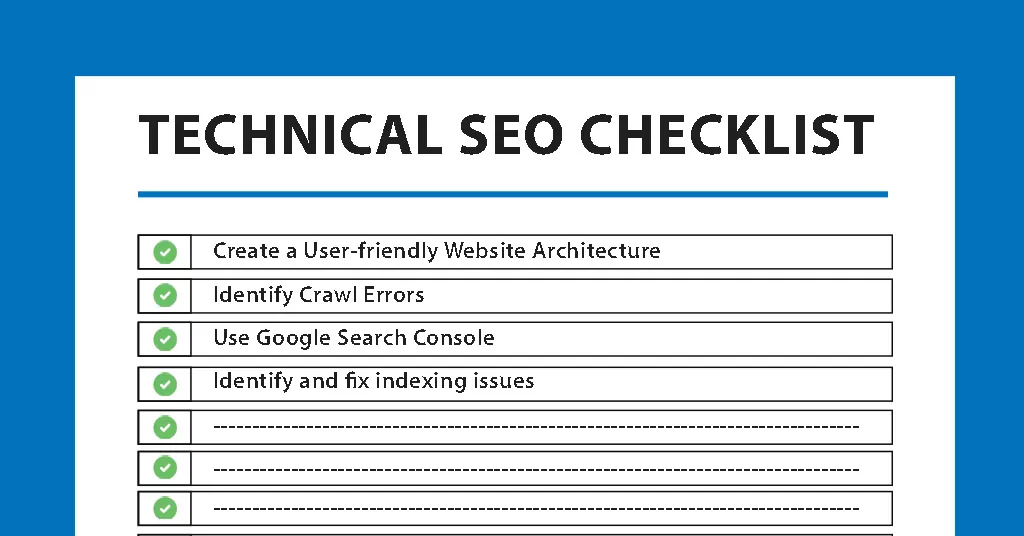

2. Request Indexing With Google Search Console

In Search of Console, you’ll be able to “Request Indexing.”

You begin by clicking on the highest search field which reads by default, “Inspect and URL in domain.com.”

Enter the URL you wish to be indexed, then hit Enter.

If the page is already known to Google, you’ll be presented with a bunch of knowledge on that. We won’t get into that here but I like to recommend logging in and seeing what’s there if you haven’t already.

The important button, for our purposes here, appears whether the page has been indexed or not – meaning that it’s good for content discovery or simply requesting Google to grasp a recent change.

You’ll find the button as shown below.

Within seconds to some minutes, you’ll search the new content or URL in Google and find the change or new content picked up.

The Fastest thanks to Check Your Core Web Vitals

3. Participate In Bing’s IndexNow

Bing has an open protocol that supports a push method of alerting search engines of the latest or updated content.

This new computer program indexing protocol is termed IndexNow.

It’s called a push protocol because the concept is to alert search engines using IndexNow about new or updated content which can cause them to return and index it.

An example of a pull protocol is the old XML Sitemap way that depends on a look engine crawler to make a decision to go to and index it (or to be fetched by Search Console).

The advantage of IndexNow is that it wastes less web hosting and data center resources, which isn’t only environmentally friendly but it saves on bandwidth resources.

The biggest benefit, however, is quicker content indexing.

4. Bing Webmaster Tools

The info provided within is substantial and can facilitate your better assess problem areas and improve your rankings on Bing, Google, and anywhere else – and doubtless provide a far better user experience furthermore.

But for getting your content indexed you just must click: Configure My Site > Submit URLs.

From there you enter the URL(s) you wish to index and click on “Submit.”

4 Tools for computer program Indexing

Let’s take a look at some of the foremost helpful options!

1. Sitemaps

Keep in mind that there are two types of sitemaps: XML and HTML. It may be easy to confuse these two concepts since they’re both kinds of sitemaps that end in -ML, but they serve different purposes.

HTML sitemaps are the user-friendly files that list all the content on your website. for instance, you’ll typically find one {in all|one amongst|one in every of} these sitemaps in a site’s footer. Scroll all the way down on Apple.com, and you’ll find this, an HTML sitemap.

This sitemap eases the site navigation. It acts as a general directory, and it can positively influence your SEO and supply a solid user experience (UX).

In contrast, an XML sitemap contains an inventory of all the essential pages on your website. You submit this document to look engines in order that they can crawl and index your content more effectively.

Keep in mind that we’ll be concerning XML documents after we discuss sitemaps during this article. We also recommend trying out our guide to making an XML sitemap, so you’ve got the document ready for various search engines.

2. Google Search Console

If you’d prefer to focus your SEO efforts on Google, the Google Search Console is an important tool to master.

In the console, you’ll be able to access an Index Coverage report, which tells you which of these pages are indexed by Google and highlights any issues during the method. Here you’ll be able to analyze problem URLs and troubleshoot them to create them “indexable”.

Additionally, you’ll be able to submit your XML sitemap to Google Search Console. This document acts as a “roadmap,” and helps Google index your content more effectively. On top of that, you’ll be able to ask Google to recrawl certain URLs and parts of your site so updated topics are always available to your audience without waiting on Google’s crawlers to form their way back to your site.

3. Alternative program Consoles

Although Google is the hottest program, it isn’t the sole option. Limiting yourself to Google can close off your site to traffic from alternative sources like Bing.

We recommend trying out our guides on submitting XML sitemaps to Bing Webmaster Tools and Yandex Webmaster Tools.

Keep in mind that every one of those consoles offers unique tools for monitoring your site’s indexing and rankings within the SERPs. Therefore, we recommend trying them out if you would like to expand your SEO strategy.

4. Robots.txt

We’ve already covered how you’ll be able to use a sitemap to inform search engines to index specific pages on your website. Additionally, you’ll be able to exclude certain content by employing a robots.txt file.

A robots.txt file contains indexation information about your site. It’s stored within your root directory and has two lines: a user-agent line that specifies a probe engine crawler, and a disallow directive that blocks particular files.

You simply have to create an easy document and name it robots.txt. Then, add your disallow data and upload the file to your root directory with a File Transfer Protocol (FTP) client.

SEO may be a broad field that covers everything from program algorithms to off-page optimization techniques. If you’re unaccustomed to the subject, you may be feeling overwhelmed by all the knowledge. Fortunately, indexing is one of the simpler concepts to know.

Search engine indexing is a necessary process that organizes your website’s content into a central database. computer program crawlers analyze your site’s content and architecture to categorize it. Then they will rank your pages in their results pages for specific search terms.

Search Engine Indexing may be a process within which the documents are parsed to make Tokens to be saved in an infinite database called Index. The index contains the words similarly because of the list of documents where the words are found. This helps to supply an efficient response to the user search queries.