Robots.txt for SEO: The complete guide

Robots.txt is a plain text file that tells web crawlers which files and folders on a server they should not access, usually because the webmaster does not want them to appear in search engines. Without specific instructions, search engine crawlers may request every folder and file they can discover on the server, which, as the Jacksonville SEO experts note, increases the chance that private information becomes public. The file must be placed in the root directory of the host it applies to.

Robots.txt contains directives that allow or deny web robots access to specific files or folders. When a well-behaved crawler visits a web server, robots.txt is the first file it requests. Compliance is voluntary, however: some crawlers disregard the instructions in the file and crawl private files and folders anyway. Most search engine robots do follow the directives specified in the file.

In this article, we’ll cover:

- What is a Robots.txt file?

- What are the five major parameters of the Robots.txt file?

- What is the syntax of Robots.txt?

- How to create a robots.txt file?

- How to check if the robots.txt is working?

- How to find the Robots.txt file?

- What are the Non-standard robots.txt crawl directives?

- What are the Robots.txt best practices?

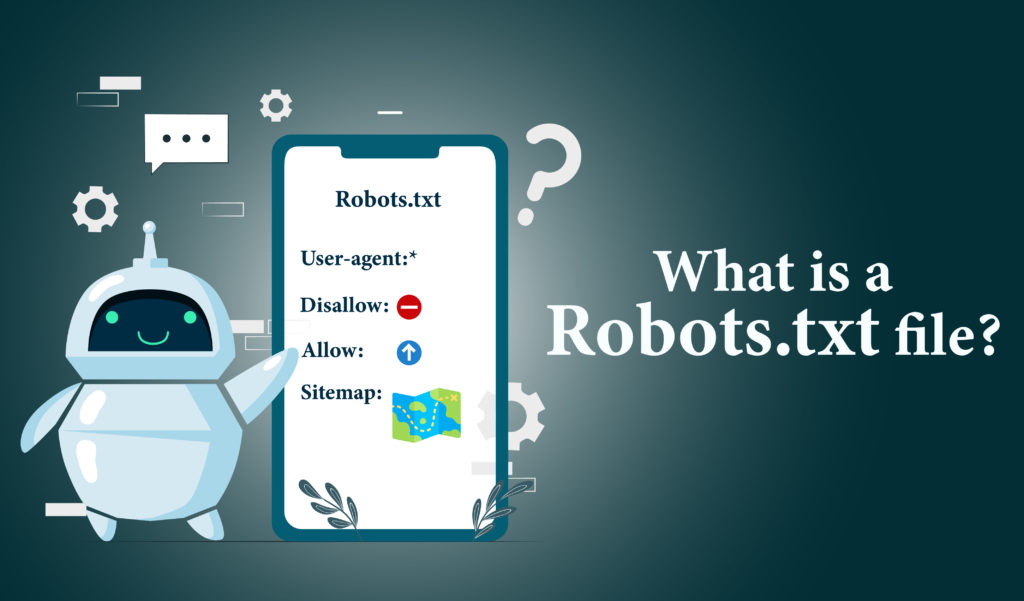

What is a Robots.txt file?

A robots.txt file is a text file that search engine crawlers read before they crawl a site. It implements the Robots Exclusion Protocol, which grew out of an agreement among early search engine developers. For many years it was only a de facto standard; the IETF published it as RFC 9309 in 2022, and all major search engines follow it. Robots.txt is mainly used to manage crawler traffic to your site and, depending on the file type, to keep a file off Google.

For example, if you don’t want Googlebot (Google’s web crawler) to crawl a specific page on your site, you can disallow that page in robots.txt. Keep in mind that blocking crawling does not by itself guarantee that the page stays out of the index.

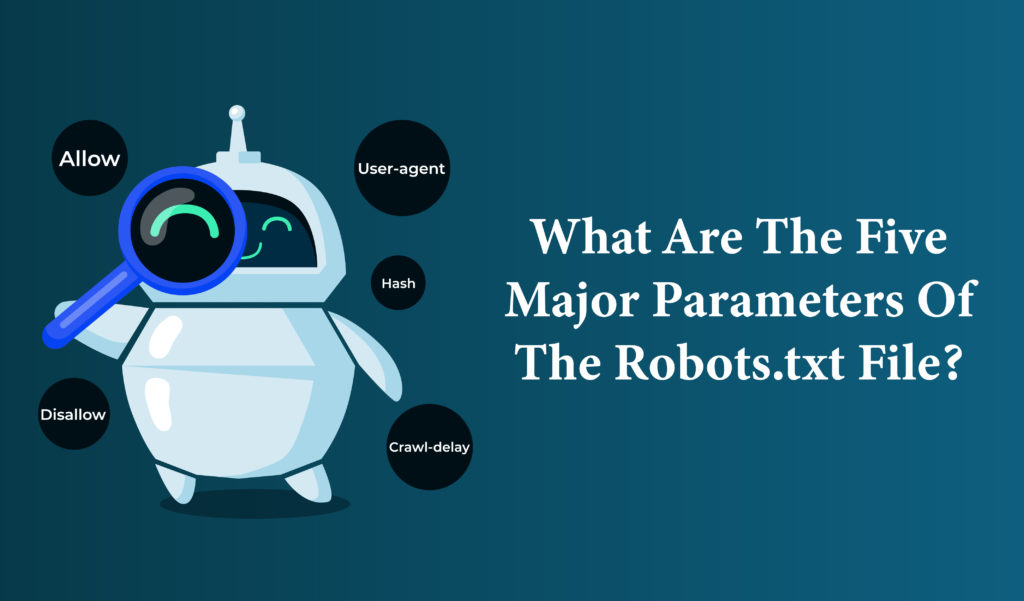

What are the five major parameters of the Robots.txt file?

The robots.txt file consists of five major parameters:

- User-agent: This field names the robot (crawler) that the rules following it apply to.

- Disallow: This field specifies the file and folder names/paths that crawlers must not crawl.

- Hash (#): This marks a comment. Use the hash character to include lines of text in the file that crawlers should ignore.

- Allow: This is the inverse of Disallow. It permits crawling of the specified files and folders, typically as an exception inside a disallowed folder.

- Crawl-delay: This parameter specifies how long to wait between requests to the same server.
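As a rough illustration of how the five parameters fit together, the sketch below feeds a small robots.txt to Python's standard `urllib.robotparser` module. The paths, the `AnyBot` name, and the `example.com` host are placeholders invented for this example. Note that Python's parser applies the first rule that matches, so the Allow line is placed before the broader Disallow line; some search engines instead pick the most specific matching rule.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; every path here is a placeholder.
ROBOTS_TXT = """\
# Keep crawlers out of the admin area, except the help page
User-agent: *
Allow: /admin/help.html
Disallow: /admin/
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask the parser what a crawler named "AnyBot" may fetch.
blocked = parser.can_fetch("AnyBot", "https://example.com/admin/settings")
exception = parser.can_fetch("AnyBot", "https://example.com/admin/help.html")
unlisted = parser.can_fetch("AnyBot", "https://example.com/blog/")
delay = parser.crawl_delay("AnyBot")

print(blocked, exception, unlisted, delay)
```

The comment line is skipped by the parser, the admin folder is blocked, the help page is let through as an exception, and any path without a matching rule stays crawlable.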

What is the syntax of Robots.txt?

A robots.txt file only works if its syntax is correct. The Jacksonville SEO experts highlight the main elements of that syntax below.

- USER-AGENT

Robots.txt is made up of text blocks. Each block begins with a User-agent string and groups directives (rules) for a particular bot.

There could be hundreds of crawlers attempting to access your website, and depending on their intentions you may want to set different boundaries for each of them. A user-agent is a text string that identifies a specific bot. Every group of directives must begin with a User-agent line. Think of it as addressing a bot by name and then giving it its commands. All directives that follow a User-agent line apply to the named bot until a new User-agent line is specified.
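The grouping by user-agent can be sketched with Python's standard `urllib.robotparser`. In this hypothetical file, Googlebot gets its own group while every other bot falls back to the `*` group; the `OtherBot` name, the paths, and the `allowed` helper are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file with one group for Googlebot and one for everyone else.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent, path):
    """Return True if the named bot may crawl the given path."""
    return parser.can_fetch(agent, "https://example.com" + path)


print(allowed("Googlebot", "/blog/"))      # Googlebot group applies
print(allowed("Googlebot", "/drafts/x"))   # blocked by the Googlebot group
print(allowed("OtherBot", "/blog/"))       # falls back to the * group
```

A bot that finds a group with its own name uses only that group, which is why Googlebot can reach /blog/ here even though the catch-all group blocks the whole site.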

- DIRECTIVES

Directives are rules that you set for search engine bots. Each text block may contain one or more directives, and each directive must begin on its own line. There is also an unofficial noindex directive, meant to indicate that a page should not be indexed, but Google stopped honoring it in robots.txt in 2019. To keep pages out of the index, use the noindex Meta Robots Tag or the X-Robots-Tag header instead. The directives are:

- Disallow: This directive tells the crawler which pages should not be crawled. By default, search engine bots can crawl any page that is not blocked by a Disallow directive. To restrict access to a specific page, you must define its path relative to the root directory.

- Allow: The Allow directive makes it possible to crawl a page inside an otherwise disallowed directory.

- Sitemap: The Sitemap directive specifies where your sitemap is located. You can include it at the start or end of your file, and you can define multiple sitemaps. Unlike the paths in other directives, the sitemap must always be given as a full URL, including the HTTP/HTTPS protocol and the www/non-www version. The Sitemap directive is optional but highly recommended, because it helps search engine bots find your sitemap faster.

- Crawl-delay: Search engine bots can crawl a large number of your pages in a short period, and each crawl consumes some of your server’s resources. If you have an enormous website with many pages, or if opening each page requires a large amount of server resources, your server may be unable to handle all requests. It then becomes overloaded, and users and search engines may experience temporary outages. The Crawl-delay directive can help here by slowing the crawling process for the bots that support it. Its value is specified in seconds. You can choose between 1 and 30 seconds.
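The requirement that Sitemap lines carry a full URL can be checked with a short script. This is a minimal sketch using Python's standard library (`site_maps()` requires Python 3.8 or later); the sitemap URLs and the `is_absolute` helper are hypothetical.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

# Hypothetical file: the second Sitemap line is deliberately relative.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/

Sitemap: https://www.example.com/sitemap-posts.xml
Sitemap: /sitemap-pages.xml
"""


def is_absolute(url):
    """True if the URL has an http(s) scheme and a host name."""
    parts = urlparse(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

sitemaps = parser.site_maps() or []   # site_maps() returns None if there are none
relative = [url for url in sitemaps if not is_absolute(url)]

print("Sitemaps found:", sitemaps)
print("Need a full URL:", relative)
```

Both lines are picked up wherever they sit in the file, and the relative one is flagged so it can be rewritten with its protocol and host.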

- WILDCARDS

Wildcards are special characters that act as placeholders for other characters in the text, which, according to the experts from Jacksonville SEO Company, makes creating the robots.txt file easier. You can use wildcards in any directive except Sitemap. They are as follows:

- An asterisk *: The asterisk stands for any sequence of characters. In the User-agent line, the asterisk addresses all search engine bots, so any directive that follows it is directed at all crawlers.

- The dollar sign $: The dollar sign marks the end of a URL, so a pattern ending in $ matches only URLs that end exactly at that point.
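One way to see how the two wildcards behave is to translate a rule into a regular expression. The sketch below is an illustrative approximation, not the matching code of any particular search engine, and the function names `rule_to_regex` and `rule_matches` are made up for this example. Python's built-in `urllib.robotparser` does not interpret `*` or `$` inside paths, which is why a hand-written matcher is used here.

```python
import re


def rule_to_regex(rule_path):
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape each literal piece, then join the pieces with '.*' for every '*'.
    body = ".*".join(re.escape(piece) for piece in rule_path.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def rule_matches(rule_path, url_path):
    """True if the rule applies to the URL path (rules match from the start)."""
    return rule_to_regex(rule_path).match(url_path) is not None


print(rule_matches("/*.pdf$", "/files/report.pdf"))             # ends in .pdf
print(rule_matches("/*.pdf$", "/files/report.pdf?download=1"))  # does not end in .pdf
print(rule_matches("/*.pdf", "/files/report.pdf?download=1"))   # no $: prefix match
```

Without the dollar sign a rule behaves as a prefix, so `/*.pdf` also covers PDF URLs with a query string, while `/*.pdf$` covers only URLs that end in `.pdf`.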

- COMMENTS

In your robots.txt file, you can add comments using the hash # character at the start of a line or after a directive. Search engines ignore everything that follows the # on the same line. Comments are for humans: they explain what a specific section means. Including them is always a good idea because they’ll help you understand what’s going on faster the next time you open the file.

How to create a robots.txt file?

It’s simple to add a robots.txt file to your website if it doesn’t already have one. A robots.txt file can be created with any plain text editor. If you have a Mac, you can use the TextEdit app in plain text mode. Start typing directives in the text document, and save the file as “robots.txt” once you’ve typed all of them.

If you want to avoid making syntax mistakes when creating your robots.txt file, SEO Company Jacksonville recommends using a robots.txt generator. Even a minor syntax error can block crawlers from pages you want in search results, so make sure your robots.txt file is configured correctly. Once the file is complete, upload it to your website’s root directory, for example with an FTP client.
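For readers who would rather generate the file from a script than type it by hand, here is a minimal sketch of such a generator. The function names `build_robots_txt` and `save_robots_txt`, the rule paths, and the sitemap URL are all hypothetical, and the script only writes a local file; uploading it to the server's root directory remains a separate step.

```python
from pathlib import Path


def build_robots_txt(groups, sitemaps=()):
    """Render {user_agent: [(directive, path), ...]} into robots.txt text."""
    lines = []
    for agent, rules in groups.items():
        lines.append(f"User-agent: {agent}")
        for directive, path in rules:
            lines.append(f"{directive}: {path}")
        lines.append("")  # a blank line separates groups
    for url in sitemaps:
        lines.append(f"Sitemap: {url}")
    return "\n".join(lines).rstrip("\n") + "\n"


def save_robots_txt(content, directory="."):
    """Write the content to <directory>/robots.txt and return the path."""
    target = Path(directory) / "robots.txt"
    target.write_text(content, encoding="utf-8")
    return target


content = build_robots_txt(
    {"*": [("Disallow", "/cart/"), ("Disallow", "/checkout/")]},
    sitemaps=["https://www.example.com/sitemap.xml"],
)
print(content)
```

Calling `save_robots_txt(content)` would then write the file into the current folder, ready to be uploaded.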

How to check if the robots.txt is working?

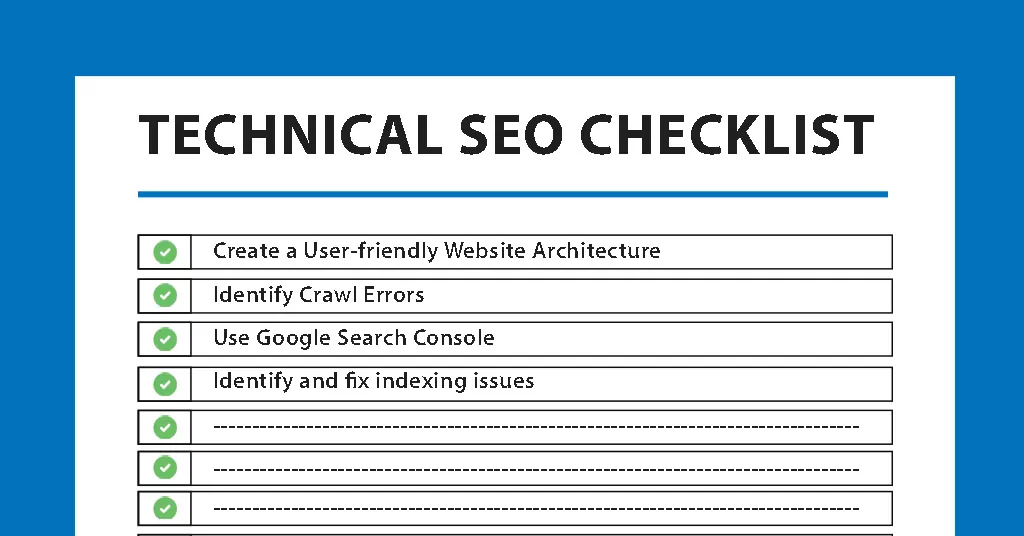

Once you’ve uploaded your robots.txt file to your root directory, you can validate it in Google Search Console using the robots.txt Tester.

The robots.txt Tester tool will determine whether or not your robots.txt file is functioning correctly. If you’ve blocked any URLs from being crawled in your robots.txt file, the Tester will check whether those URLs are in fact blocked for web crawlers. Just because your robots.txt has been validated once does not mean it will stay error-free indefinitely.

Errors in robots.txt are fairly common. A poorly configured robots.txt file can impair your site’s crawlability. Google Search Console is the most effective way to check your robots.txt for issues. Log in to your Google Search Console account and go to the “Index” section to find the “Coverage” report. If there are any errors or warnings related to your robots.txt file, they will be listed in the “Coverage” report.
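Alongside Search Console, a quick local check can catch accidental blocks before a file goes live. The sketch below compares what you expect to be crawlable with what Python's standard `urllib.robotparser` reports. The `check_paths` helper, the sample rules, and the paths are assumptions for illustration, and Python's parser may interpret some rules differently from a given search engine.

```python
from urllib.robotparser import RobotFileParser


def check_paths(robots_txt, agent, expectations, site="https://example.com"):
    """Return (path, expected, actual) for every path whose result is unexpected."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    mismatches = []
    for path, expected in expectations.items():
        actual = parser.can_fetch(agent, site + path)
        if actual != expected:
            mismatches.append((path, expected, actual))
    return mismatches


# Hypothetical rules: "Disallow: /blog" is a prefix, so it also blocks /blog/...
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /blog
"""

problems = check_paths(
    ROBOTS_TXT,
    "Googlebot",
    {"/checkout/pay": False, "/blog/hello-world": True, "/": True},
)
print(problems)
```

Here the blog post is reported as a mismatch because the overly broad `/blog` rule blocks it. To test a file that is already online, `RobotFileParser` also offers `set_url()` and `read()` to download it first.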

How to find the Robots.txt file?

To find an existing robots.txt file, add /robots.txt to the root URL of the site in your browser. If nothing is there, open a blank .txt file and begin typing directives. Continue to add directives until you’re satisfied with what you have, then save the file as “robots.txt.” You can also use a robots.txt generator.

The benefit of using such a tool is that it reduces syntax errors. That matters because one mistake could lead to an SEO disaster for your site, so it’s better to be safe than sorry. The disadvantage is that its customizability is somewhat limited.

What are the Non-standard robots.txt crawl directives?

- The allow directive

There are a couple of other crawl directives you can use in addition to the Disallow and User-agent directives. Because not all search engine crawlers support these directives, make sure you understand their limitations. The allow directive was not in the original “specification,” although it was discussed early on. It lets you open up a single file inside a blocked folder, for instance one file inside an otherwise disallowed wp-admin folder on a WordPress site. Without an allow directive, the only way to achieve the same result would be to disallow every other file in the wp-admin folder explicitly.

- The crawl-delay setting

Crawl-delay is an unofficial addition to the standard that few search engines follow. At the very least, Google and Yandex do not use it, and Bing’s handling is unclear. Crawlers can be crawl-hungry, so you could try the crawl-delay directive to slow them down.

A line such as “Crawl-delay: 10” would instruct the search engines that support it to wait ten seconds between requests to your website. Use the crawl-delay directive with caution. With a crawl delay of ten seconds, you limit these search engines to 8,640 pages per day. That may be sufficient for a small site but is too little for a large one. On the other hand, if you receive very little traffic from these search engines, it may be a good way to save bandwidth.
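The 8,640 figure follows from dividing the number of seconds in a day by the delay. The short calculation below spells that out; the 100,000-page site is a made-up example to show how the limit scales.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def max_pages_per_day(crawl_delay_seconds):
    """Upper bound on pages one crawler can request per day at a given delay."""
    return SECONDS_PER_DAY // crawl_delay_seconds


def days_to_crawl(total_pages, crawl_delay_seconds):
    """Whole days needed to request every page once at that delay."""
    return math.ceil(total_pages / max_pages_per_day(crawl_delay_seconds))


print(max_pages_per_day(10))        # 86,400 / 10
print(days_to_crawl(100_000, 10))   # hypothetical 100,000-page site
```

At a ten-second delay, a crawler would need about twelve days to request each page of the hypothetical 100,000-page site once, which is why the directive suits small sites better than large ones.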

- The sitemap directive for XML sitemaps

You can use each search engine’s webmaster tools to submit your XML sitemaps. We strongly advise you to do so because webmaster tools provide a wealth of information about your site. If you don’t want to do that, adding a sitemap line to your robots.txt file is a quick alternative. If you let Yoast SEO generate a robots.txt file, it will automatically add a link to your sitemap. You can also add the line manually to an existing robots.txt file using the file editor in the Tools section of Yoast SEO.

What are the Robots.txt best practices?

The best practices of Robots.txt are:

- Robots.txt should not be used to block JavaScript or CSS files. Bots may not render your content correctly if these resources are unavailable.

- Make sure to include a link to your sitemap in robots.txt so that all search engine bots can easily find it.

- The interpretation of robots.txt syntax will vary depending on the search engine. If unsure, always double-check how a search engine bot treats a specific directive.

- When using wildcards, use caution. If you abuse them, you may accidentally block access to an entire section of your website.

- Do not rely on robots.txt to protect your private content. If you want to secure a page, protect it with a password. The robots.txt file is also publicly accessible, so listing private paths in it risks disclosing their location to malicious bots.

- Disallowing crawler access will not remove your page from the search results. The page can still be indexed if there are many links with descriptive anchor text pointing to it. If you want to avoid this, use the Meta Robots Tag or X-Robots-Tag header instead.

Robots.txt is a simple text file that serves as a powerful SEO tool. An optimized robots.txt file can improve your pages’ indexability and raise your site’s visibility in search results. The robots.txt file is an essential part of most SEO recommendations. Some webmasters regard it as redundant optimization, yet it improves the efficiency of crawling and indexing your website and keeps web crawlers from getting stuck in internal link loops. Writing the rules correctly does require a careful approach.

Jacksonville SEO Company advises you to remember a few things. The noindex meta tag instructs crawlers not to index a page’s contents: crawlers will read the page and pass on any link equity they find, but they will not index the page. Because robots.txt only blocks crawling, there is a chance that a blocked URL will still be indexed and displayed in the search results without a snippet. The best way to remove URLs from the Google index is to use noindex meta tags or the Google URL removal tool. In the robots.txt file, each “Disallow:” line can contain only one URL path. If you have multiple subdomains on the site, each one requires its own robots.txt file.
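Because the file lives at the root of each host, the robots.txt that governs a given page can be derived from the page’s URL. The sketch below does that with Python’s standard `urllib.parse`; the function name `robots_txt_url` and the example subdomains are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path, and drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://blog.example.com/posts/seo?page=2"))
print(robots_txt_url("https://shop.example.com/cart"))
```

The two pages sit on different subdomains, so they resolve to two different robots.txt files, each of which has to be created and maintained separately.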